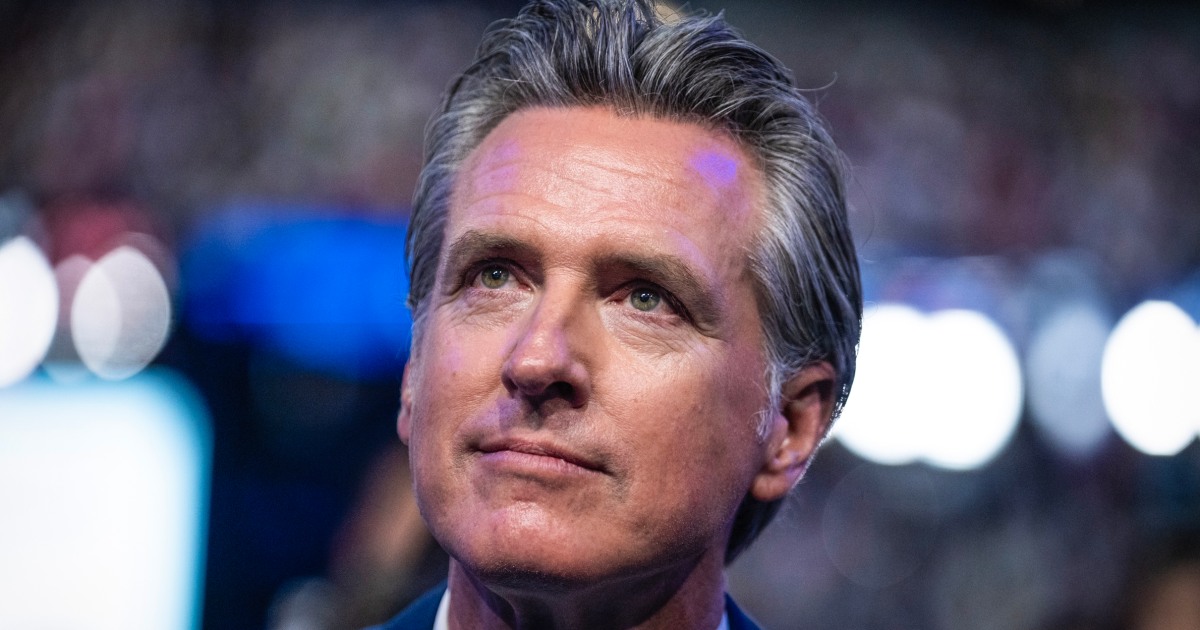

California Governor Gavin Newsom on Tuesday signed two bills aimed at protecting actors and other artists from the unauthorized use of their digital likenesses.

Introduced in the state Legislature earlier this year, the bills specify new legal protections – both during the life of artists and after death – around the digital replication of their image or voice.

Newsom, who governs a state that hosts the largest entertainment market in the world, signed them into law amid growing concern about the impact of artificial intelligence on the work of artists.

“We’re talking about California being a state of dreamers and doers. A lot of dreamers come to California, but sometimes they’re not well represented,” Newsom said in a video shared on social media on Tuesday. “And with SAG and this bill I signed, we’re making sure that no one passes their name, image and likeness to unscrupulous people without union representation or defense.”

He was joined in the video by Fran Drescher, the president of SAG-AFTRA, which represents about 160,000 media professionals. The union strongly advocated for the new laws, along with other AI-related protections for actors and other artists.

Drescher said the legislation could “speak to people all over the world who feel threatened by AI.”

“And even if there are smart people who come up with these inventions, I don’t think they think about everything that will happen when people don’t have a place to earn a living and continue to feed their families,” she said in the video.

One of the laws, AB 2602, protects artists from being bound to contracts that allow the use of their digital voices or images, either in place of their own work or to train AI.

Such clauses will be considered unfair and against public policy, according to the law, which applies to past and future contracts. It also requires anyone who holds such a contract to notify the other party in writing by February 1 that the clause is no longer valid.

The other law, AB 1836, specifically protects digital likenesses as part of artists’ posthumous right of publicity, a legal right that protects people’s identities from unauthorized commercial use.

It allows rights holders for deceased personalities to sue if digital replicas of them are used without permission in films or sound recordings. Rights holders would be entitled to at least $10,000 or the amount of actual damages caused by unauthorized use, whichever figure is greater.

It is a change that strengthens the rights of performers in an area that is already subject to legal conflict. Drake this year pulled a diss track he promoted online that used an AI-generated version of the late Tupac Shakur’s voice after Shakur’s estate threatened to sue him.

The future of generative AI — and how it might be used to replace human labor — was a sticking point for actors and writers during last summer’s Hollywood strikes.

Earlier this year, SAG-AFTRA negotiated a controversial deal with an AI voice technology company to allow the consensual licensing of digitally replicated voices for video games. In July, Hollywood’s video game performers voted to go on strike over continued AI concerns.

In recent years, the likenesses of actors like Tom Hanks and Scarlett Johansson, along with a host of celebrities and influencers, have been used in non-consensual deepfake ads.

Many artists and tech companies are also waiting to see if Newsom will sign a third bill, SB 1047, which would require AI developers to meet certain safety and security guidelines before training their AI models.

The legislation received support from SAG-AFTRA, non-profit advocacy groups and the likes of actor Mark Ruffalo, who posted a video on X last weekend urging Newsom to sign.

“All the big tech companies and the billionaire tech guys in Silicon Valley don’t want to see this happen, which should make everyone start seeing why immediately,” Ruffalo said in his video. “But AI is about to explode, and in a way that we have no idea what the consequences are.”